Upload a File to S3 Fails Python

AWS Simple Storage Service (S3) is by far the most popular service on AWS. The simplicity and scalability of S3 made it a go-to platform not only for storing objects, but also for hosting them as static websites, serving ML models, providing backup functionality, and so much more. It became the simplest solution for event-driven processing of images, video, and audio files, and even matured into a de-facto replacement of Hadoop for big data processing. In this article, we'll look at various ways to leverage the power of S3 in Python.

**Some use cases may really surprise you!**

Note: each code snippet below includes a link to a GitHub Gist, shown as (Gist).

1. Reading objects without downloading them

Imagine that you want to read a CSV file into a Pandas dataframe without downloading it. Here is how you can read the object's body directly as a Pandas dataframe (Gist):

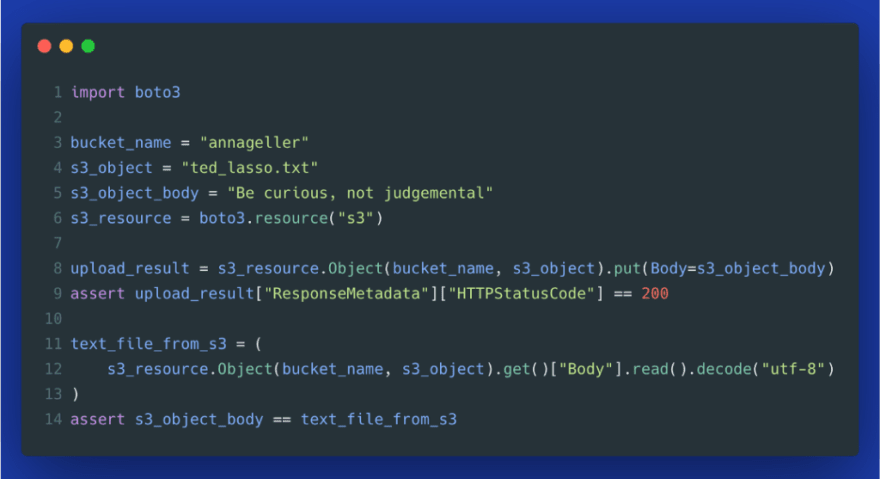
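Since the Gist isn't reproduced in this text, here is a minimal sketch of the same idea. It assumes you pass in a boto3 S3 client (for example `boto3.client("s3")`) and that the object is a plain CSV file; the helper name `read_csv_from_s3` is hypothetical. `get_object()` returns the object's body as a stream, which is read into memory and handed to `pd.read_csv()`, so nothing is written to disk.

```python
import io

import pandas as pd


def read_csv_from_s3(s3_client, bucket: str, key: str) -> pd.DataFrame:
    """Load a CSV object from S3 straight into a DataFrame, without a local file.

    `s3_client` is assumed to be a boto3 S3 client, e.g. boto3.client("s3").
    """
    response = s3_client.get_object(Bucket=bucket, Key=key)
    # response["Body"] is a file-like stream; read its bytes and wrap them
    # in an in-memory buffer that pandas can parse.
    return pd.read_csv(io.BytesIO(response["Body"].read()))
```

Calling `read_csv_from_s3(boto3.client("s3"), "annageller", "sales/customers.csv")` would return the file as a dataframe. The whole object is held in memory, so this suits files that fit comfortably in RAM.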

Similarly, if you want to upload and read small pieces of textual data such as quotes, tweets, or news articles, you can do that using the S3 resource method put(), as demonstrated in the example below (Gist).

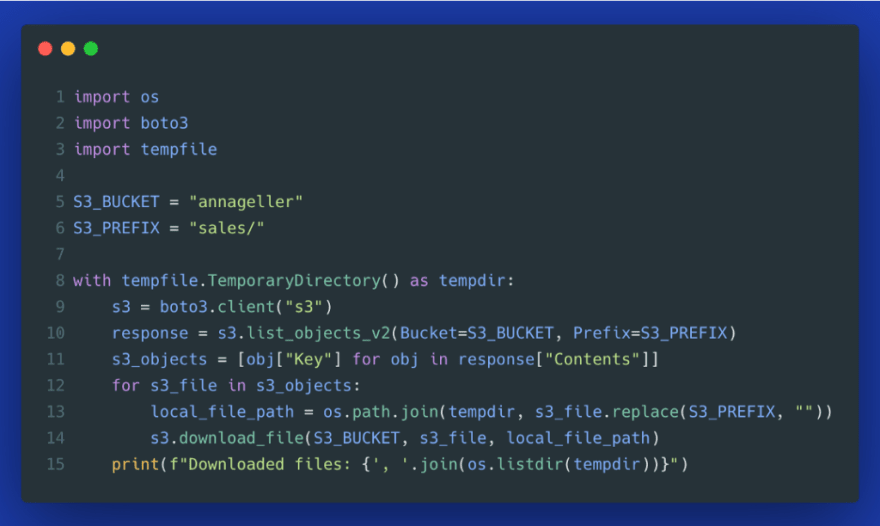
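A rough equivalent of the put() approach, assuming a boto3 S3 resource (`boto3.resource("s3")`) is passed in. The helpers `put_text` and `get_text` are hypothetical names; encoding the text as UTF-8 on the way in and decoding it on the way out is an assumption about how you want the strings stored.

```python
def put_text(s3_resource, bucket: str, key: str, text: str) -> None:
    """Store a small piece of text as an S3 object using the resource API's put()."""
    s3_resource.Object(bucket, key).put(
        Body=text.encode("utf-8"),
        ContentType="text/plain; charset=utf-8",
    )


def get_text(s3_resource, bucket: str, key: str) -> str:
    """Read the object back into a string, again without touching the local disk."""
    response = s3_resource.Object(bucket, key).get()
    return response["Body"].read().decode("utf-8")
```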

2. Downloading files to a temporary directory

As an alternative to reading files directly, you could download all files that you need to process into a temporary directory. This can be useful when you have to extract a large number of small files from a specific S3 directory (e.g., near real-time streaming data), concatenate all this data together, and then load it to a data warehouse or database in one go. Many analytical databases can process larger batches of data more efficiently than lots of tiny loads. Therefore, downloading and processing files, and then opening a single database connection for the Load part of ETL, can make the process more robust and efficient.

By using a temporary directory, you can be sure that no state is left behind if your script crashes midway (Gist).
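The temporary-directory pattern might look like the sketch below. It assumes a boto3 S3 client, objects that are UTF-8 text with one record per line, and a hypothetical helper name `download_and_concatenate`. The paginator handles prefixes holding more than 1,000 objects, and `tempfile.TemporaryDirectory` removes the downloaded files when the `with` block exits, whether it finishes normally or raises.

```python
import os
import tempfile


def download_and_concatenate(s3_client, bucket: str, prefix: str) -> list:
    """Download all objects under `prefix` to a temp dir and return their records.

    Each non-empty object is assumed to be UTF-8 text with one record per line.
    """
    paginator = s3_client.get_paginator("list_objects_v2")
    records = []
    with tempfile.TemporaryDirectory() as tmp_dir:
        for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
            # "Contents" is missing from a page when nothing matches.
            for obj in page.get("Contents", []):
                key = obj["Key"]
                if key.endswith("/"):
                    continue  # skip zero-byte "folder" placeholder objects
                local_path = os.path.join(tmp_dir, key.replace("/", "_"))
                s3_client.download_file(bucket, key, local_path)
                with open(local_path, encoding="utf-8") as f:
                    records.extend(f.read().splitlines())
    # The temporary directory and its files are deleted at this point.
    return records
```

The returned records could then be written to the warehouse through a single database connection.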

3. Specifying content type when uploading files

Often when we upload files to S3, we don't think about the metadata behind that object. However, setting it explicitly has some advantages. Let's look at an example.

Starting from line 9, we first upload a CSV file without explicitly specifying the content type. When we then check how this object's metadata has been stored, we find out that it was labeled as binary/octet-stream. Typically, most files will be labeled correctly based on the file's extension, but issues like this may happen unless we specify the content type explicitly.

Starting from line 21, we do the same, but we explicitly pass text/csv as the content type. The HeadObject operation confirms that the metadata is now correct (Gist).
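The Gist isn't included here, so the following is a minimal sketch of the explicit variant, assuming a boto3 S3 client. `ExtraArgs={"ContentType": ...}` on `upload_file()` sets the metadata, and `head_object()` reads it back. The fallback to `mimetypes.guess_type()` and the generic `application/octet-stream` default are choices of this sketch rather than part of the original example, and the function names are hypothetical.

```python
import mimetypes
from typing import Optional


def upload_with_content_type(s3_client, filename: str, bucket: str, key: str,
                             content_type: Optional[str] = None) -> str:
    """Upload a file with an explicit Content-Type and return the type that was sent.

    If no type is given, guess it from the file extension; unknown
    extensions fall back to a generic binary type.
    """
    if content_type is None:
        guessed, _encoding = mimetypes.guess_type(filename)
        content_type = guessed or "application/octet-stream"
    s3_client.upload_file(filename, bucket, key,
                          ExtraArgs={"ContentType": content_type})
    return content_type


def stored_content_type(s3_client, bucket: str, key: str) -> str:
    """Ask S3 which Content-Type it recorded, via the HeadObject operation."""
    return s3_client.head_object(Bucket=bucket, Key=key)["ContentType"]
```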

4. Retrieving only objects with a specific content type

You may ask: what benefit do we get from explicitly specifying the content type in ExtraArgs? In the example below, we try to filter for all CSV files (Gist).

This will return a list of ObjectSummary objects that match this content type:

Out[2]:

[s3.ObjectSummary(bucket_name='annageller', key='sales/customers.csv')]

If we hadn't specified the content type explicitly, this file wouldn't have been found.
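The original example returns `ObjectSummary` objects from the resource API. The sketch below takes a slightly different route with the client API and returns plain keys instead. Because ListObjectsV2 does not report content types, it sends one HeadObject request per object, which is fine for small buckets but slow for large ones. The helper name and the `prefix` parameter are assumptions.

```python
def keys_with_content_type(s3_client, bucket: str, content_type: str,
                           prefix: str = "") -> list:
    """Return the keys of all objects whose stored Content-Type matches."""
    matches = []
    paginator = s3_client.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        for obj in page.get("Contents", []):
            head = s3_client.head_object(Bucket=bucket, Key=obj["Key"])
            # Compare only the media type, so "text/csv; charset=utf-8"
            # still counts as "text/csv".
            media_type = head.get("ContentType", "").split(";")[0].strip()
            if media_type.lower() == content_type.lower():
                matches.append(obj["Key"])
    return matches
```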

5. Hosting a static HTML report

S3 is not only good at storing objects but also at hosting them as static websites. First, we create an S3 bucket that can have publicly available objects.

Turning off the "Block all public access" feature (image by author)

Then, we generate an HTML page from any Pandas dataframe you want to share with others, and we upload this HTML file to S3. This way, we build a simple tabular report that we can share with others (Gist).
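A possible version of the report upload, assuming a boto3 S3 client, a bucket whose bucket policy already allows public reads, and a hypothetical helper named `publish_html_report`. Setting `ContentType` to `text/html` makes browsers render the page instead of downloading it. The returned address uses the regional virtual-hosted-style URL format, so the `region` argument must match the bucket's actual region.

```python
from urllib.parse import quote

import pandas as pd


def publish_html_report(s3_client, df: pd.DataFrame, bucket: str, key: str,
                        region: str) -> str:
    """Render a DataFrame as an HTML table, upload it, and return its public URL."""
    html = df.to_html(index=False)
    s3_client.put_object(
        Bucket=bucket,
        Key=key,
        Body=html.encode("utf-8"),
        ContentType="text/html; charset=utf-8",  # render in the browser
    )
    # The URL only works if the bucket policy grants public read access.
    return f"https://{bucket}.s3.{region}.amazonaws.com/{quote(key)}"
```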

If you did not configure your S3 bucket to allow public access, you will receive an S3UploadFailedError:

boto3.exceptions.S3UploadFailedError: Failed to upload sales_report.html to annageller/sales_report.html: An error occurred (AccessDenied) when calling the PutObject operation: Access Denied

To solve this problem, you can either enable public access for specific files in this bucket, or you can use presigned URLs as shown in the section below.

6. Generating presigned URLs for temporary access

When you generate a report, it may contain sensitive data. You may not want to allow everybody in the world to look at your business reports. To solve this issue, you can leverage an S3 feature called presigned URLs, which grant access to a specific S3 object by embedding a temporary credential token directly into the URL. Here is the same example from above, but now using a private S3 bucket (with "Block all public access" set to "On") and a presigned URL (Gist).

The URL, created by the script above, should look similar to this:

Presigned URL (image by author)
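Generating the link itself is a single client call. The sketch below assumes a boto3 S3 client whose credentials can read the object; `presigned_report_url` is a hypothetical name, and the seven-day check reflects the maximum lifetime of a SigV4 presigned URL. A URL signed with temporary credentials (for example from an assumed role) stops working when those credentials expire, even if `ExpiresIn` is longer.

```python
MAX_EXPIRY_SECONDS = 7 * 24 * 3600  # SigV4 presigned URLs last at most 7 days


def presigned_report_url(s3_client, bucket: str, key: str,
                         expires_in: int = 3600) -> str:
    """Create a time-limited GET link to an object in a private bucket.

    Anyone holding the URL can download the object until it expires,
    without the bucket being public. `expires_in` is in seconds.
    """
    if not 1 <= expires_in <= MAX_EXPIRY_SECONDS:
        raise ValueError("expires_in must be between 1 second and 7 days")
    return s3_client.generate_presigned_url(
        ClientMethod="get_object",
        Params={"Bucket": bucket, "Key": key},
        ExpiresIn=expires_in,
    )
```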

7. Uploading large files with multipart upload

Uploading large files to S3 at once has a significant disadvantage: if the process fails close to the finish line, you need to start again from scratch. Additionally, the process is not parallelizable. AWS approached this problem by offering multipart uploads. This process breaks down large files into contiguous portions (parts). Each part can be uploaded in parallel using multiple threads, which can significantly speed up the process. Additionally, if the upload of any part fails due to network issues (packet loss), it can be retransmitted without affecting other parts.
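To illustrate what happens under the hood, here is a sketch of the low-level multipart API: start an upload, send the parts, then complete it. In practice boto3's managed transfer does this for you. The sketch assumes a boto3 S3 client, and the helper name is hypothetical; parts are sent sequentially for readability, whereas boto3 sends them from multiple threads.

```python
def multipart_upload(s3_client, filename: str, bucket: str, key: str,
                     part_size: int = 8 * 1024 * 1024) -> int:
    """Upload a file in parts and return the number of parts sent.

    S3 requires every part except the last to be at least 5 MiB, and an
    empty file (zero parts) is rejected at completion time.
    """
    upload_id = s3_client.create_multipart_upload(Bucket=bucket, Key=key)["UploadId"]
    parts = []
    try:
        with open(filename, "rb") as f:
            part_number = 1
            while chunk := f.read(part_size):
                # A failed part could be retried on its own without
                # resending the parts that already succeeded.
                response = s3_client.upload_part(
                    Bucket=bucket, Key=key, UploadId=upload_id,
                    PartNumber=part_number, Body=chunk,
                )
                parts.append({"ETag": response["ETag"], "PartNumber": part_number})
                part_number += 1
        s3_client.complete_multipart_upload(
            Bucket=bucket, Key=key, UploadId=upload_id,
            MultipartUpload={"Parts": parts},
        )
    except Exception:
        # Abort so S3 does not keep (and bill for) orphaned parts.
        s3_client.abort_multipart_upload(Bucket=bucket, Key=key, UploadId=upload_id)
        raise
    return len(parts)
```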

To leverage multipart uploads in Python, boto3 provides a class TransferConfig in the module boto3.s3.transfer. The caveat is that you actually don't need to use it by hand. Any time you use the S3 client's method upload_file(), it automatically leverages multipart uploads for large files. But if you want to optimize your uploads, you can change the default parameters of TransferConfig to:

- set a custom number of threads,

- disable multithreading,

- specify a custom threshold from which boto3 should switch to multipart uploads.

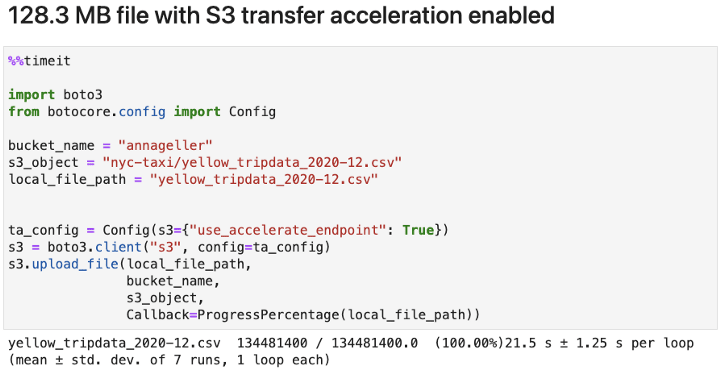
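The three options above map to `TransferConfig` parameters: `max_concurrency` (number of threads), `use_threads`, and `multipart_threshold` (in bytes). A minimal sketch, assuming a boto3 S3 client; the helper names are hypothetical. To effectively disable multipart uploads, one approach is to set `multipart_threshold` larger than the file.

```python
MB = 1024 ** 2


def transfer_settings(threads=None, use_threads=True, multipart_threshold_mb=None):
    """Build keyword arguments for boto3.s3.transfer.TransferConfig.

    Only options the caller sets are included; everything else keeps
    boto3's defaults.
    """
    kwargs = {"use_threads": use_threads}
    if threads is not None:
        kwargs["max_concurrency"] = threads
    if multipart_threshold_mb is not None:
        kwargs["multipart_threshold"] = int(multipart_threshold_mb * MB)
    return kwargs


def upload_with_settings(s3_client, filename, bucket, key, **settings):
    """Upload via the managed upload_file() with a customized TransferConfig."""
    # Imported here so transfer_settings() can be used without boto3 installed.
    from boto3.s3.transfer import TransferConfig

    config = TransferConfig(**transfer_settings(**settings))
    s3_client.upload_file(filename, bucket, key, Config=config)
```

For example, `upload_with_settings(client, path, bucket, key, threads=20)` raises the thread count, while `use_threads=False` uploads everything from the calling thread.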

Let's test the performance of several transfer configuration options. In the images below, you can see the time it took to upload a 128.3 MB file from the New York City Taxi dataset:

- using the default configuration,

- specifying a custom number of threads,

- disabling multipart uploads.

We can see from the image above that when using a relatively slow WiFi network, the default configuration provided the fastest upload result. In contrast, when using a faster network, parallelization across more threads turned out to be slightly faster.

Regardless of your network speed, the default configuration seems to be good enough for most use cases. Multipart upload did help speed up the operation, but adding more threads did not help much. This might vary depending on the file size and the stability of your network. From the experiment above, we can infer that it's best to just use s3.upload_file() without manually changing the transfer configuration; boto3 takes care of that well enough under the hood. But if you really are looking into speeding up S3 file transfers, have a look at the section below.

Note: the ProgressPercentage class, passed as Callback, is taken directly from the boto3 docs. It allows us to see a progress bar during the upload.
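The callback below follows the `ProgressPercentage` example from the boto3 documentation, with a guard against empty files added. The `timed_upload` wrapper is a hypothetical helper showing one way to reproduce a timing comparison like the one above; it assumes a boto3 S3 client and accepts an optional `TransferConfig` through the `Config` parameter of `upload_file()`.

```python
import os
import sys
import threading
import time


class ProgressPercentage:
    """Progress callback: boto3 calls it with the bytes sent since the last call.

    Calls may come from several upload threads, hence the lock.
    """

    def __init__(self, filename):
        self._filename = filename
        self._size = float(os.path.getsize(filename))
        self._seen_so_far = 0
        self._lock = threading.Lock()

    def __call__(self, bytes_amount):
        with self._lock:
            self._seen_so_far += bytes_amount
            percentage = (self._seen_so_far / self._size) * 100 if self._size else 100.0
            sys.stdout.write(
                "\r%s  %s / %s  (%.2f%%)"
                % (self._filename, self._seen_so_far, self._size, percentage)
            )
            sys.stdout.flush()


def timed_upload(s3_client, filename, bucket, key, config=None):
    """Upload a file with a progress bar and return the elapsed seconds."""
    extra = {"Config": config} if config is not None else {}
    start = time.perf_counter()
    s3_client.upload_file(filename, bucket, key,
                          Callback=ProgressPercentage(filename), **extra)
    elapsed = time.perf_counter() - start
    sys.stdout.write("\n")
    return elapsed
```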

8. Making use of S3 Transfer Acceleration

In the last section, we looked at using multipart uploads to improve performance. AWS provides another feature that can help us upload large files, called S3 Transfer Acceleration. It speeds up uploads (PUTs) and downloads (GETs) over long distances between the applications or users sending data and the S3 bucket storing it. Instead of sending data directly to the target location, we send it to an edge location closer to us, and AWS then sends it in an optimized way from the edge location to the final destination.

Why is it an "optimized" way? Because AWS moves the data solely within the AWS network, i.e., from the edge location to the target destination in a specific AWS region.

When can we gain significant benefits using S3 Transfer Acceleration?

- when we are sending large objects, typically more than 1 GB,

- when we are sending data over long distances, e.g., from the region eu-central-1 to us-east-1.

To enable this feature, go to "Properties" within your S3 bucket page and select "Enable":

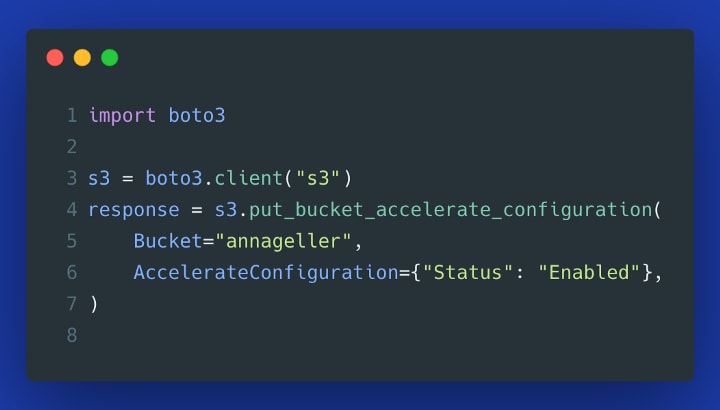

Alternatively, you can enable this feature from Python (Gist):

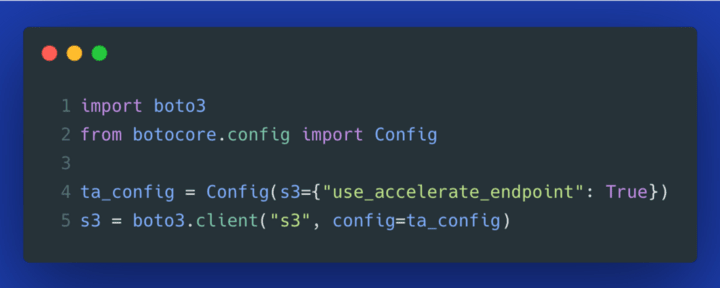
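Enabling acceleration from Python comes down to a call to `put_bucket_accelerate_configuration()`. The sketch assumes a boto3 S3 client with permission to change the bucket configuration; the helper name is hypothetical. Per the S3 documentation, a bucket used with Transfer Acceleration must have a DNS-compliant name without periods.

```python
def enable_transfer_acceleration(s3_client, bucket: str) -> str:
    """Turn on S3 Transfer Acceleration for a bucket and return its reported status."""
    s3_client.put_bucket_accelerate_configuration(
        Bucket=bucket,
        AccelerateConfiguration={"Status": "Enabled"},
    )
    # The "Status" key is absent if acceleration was never configured.
    response = s3_client.get_bucket_accelerate_configuration(Bucket=bucket)
    return response.get("Status", "Not configured")
```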

To use this feature in boto3, we need to enable it on the S3 client object (Gist):
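On the client side, botocore's `Config` accepts `s3={"use_accelerate_endpoint": True}`, which routes requests through the `s3-accelerate.amazonaws.com` endpoint. A minimal sketch; `make_s3_client` is a hypothetical name, and acceleration must already be enabled on the bucket, otherwise requests through this endpoint fail. The imports sit inside the function only so the module loads without boto3 installed.

```python
def make_s3_client(accelerate: bool = True, **client_kwargs):
    """Create a boto3 S3 client, optionally using the Transfer Acceleration endpoint.

    Extra keyword arguments (e.g. region_name) are passed to boto3.client().
    """
    import boto3
    from botocore.config import Config

    config = Config(s3={"use_accelerate_endpoint": accelerate})
    return boto3.client("s3", config=config, **client_kwargs)
```

Uploads then work exactly as before, e.g. `make_s3_client().upload_file(path, bucket, key)`.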

Now we can test the performance. First, let's test the same file from the previous section. We can barely see any improvement.

When comparing the performance of a plain multipart upload with one that additionally turns on S3 Transfer Acceleration, we can see that the performance gains are tiny, regardless of the object size we examined. Below is the same experiment using a larger file (1.6 GB in size).

In our case, we were sending data from Berlin to the eu-central-1 region located in Frankfurt (Germany). The nearest edge location seems to be in Hamburg. The distances are rather short. If we had to send the same 1.6 GB file to a US region, then Transfer Acceleration could provide a more noticeable advantage.

TL;DR for optimizing upload and download performance using Boto3:

- you can safely use the default configuration of s3_client.upload_file() in most use cases; it has sensible defaults for multipart uploads, and changing anything in the TransferConfig did not provide any significant advantage in our experiment,

- use S3 Transfer Acceleration only when sending large files over long distances, i.e., cross-region data transfers of particularly large files.

Note: enabling S3 Transfer Acceleration can incur additional data transfer costs.

How to monitor performance bottlenecks in your scripts?

In the last two sections, we looked at how to optimize S3 data transfer. If you want to track further operational bottlenecks in your serverless resources, you may explore Dashbird, an observability platform for monitoring serverless workloads. You can use it to examine the execution time of your serverless functions, SQS queues, SNS topics, DynamoDB tables, Kinesis streams, and more. It provides many visualizations and aggregated views on top of your CloudWatch logs out of the box. If you want to get started with the platform, you can find more information here.

Cheers for reading!

Resources and further reading:

AWS Docs: Multipart uploads

Boto3: S3 Examples

Boto3: Presigned URLs

Explaining boto3: How to use any AWS service with Python

Log-based monitoring for AWS Lambda

Source: https://dev.to/dashbird/8-tricks-to-use-s3-more-effectively-with-python-ck0
